mirror of

https://codeberg.org/Mo8it/How_To_Linux.git

synced 2025-03-31 22:43:55 +00:00

Organize days content

This commit is contained in:

parent

55bf9ed17b

commit

c30081a00d

14 changed files with 843 additions and 900 deletions

|

|

@ -1,6 +1,6 @@

|

|||

# Tasks

|

||||

|

||||

## Task 0: Collective-Score

|

||||

## Task: Collective-Score

|

||||

|

||||

This course uses [collective-score](https://codeberg.org/mo8it/collective-score) for interactive tasks.

|

||||

I spent many days programming it for you 😇

|

||||

|

|

@ -42,7 +42,7 @@ Congratulations, you have done your first task 🎉

|

|||

|

||||

To see your progress at any time, run the command **`cs progress show`**.

|

||||

|

||||

## Task 1: Building houses 🏠️

|

||||

## Task: Building houses 🏠️

|

||||

|

||||

In this task, you will build a house with different rooms using (nested) directories.

|

||||

|

||||

|

|

@ -92,7 +92,7 @@ Tipps:

|

|||

- If you get lost, use `pwd`.

|

||||

- If you are looking for an option but cannot remember it, use `--help`.

|

||||

|

||||

## Task 2: Reset your password

|

||||

## Task: Password 🔑

|

||||

|

||||

⚠️ Launch the [fish shell](https://fishshell.com/) by running the command `fish` and stay in it for all following tasks.

|

||||

If you close the terminal and launch it again later, you have to run `fish` again.

|

||||

|

|

@ -102,7 +102,7 @@ Use the command `passwd` to reset the password of your user. It is important to

|

|||

|

||||

🟢 Run `cs task passwd`.

|

||||

|

||||

## Task 3: Update your system

|

||||

## Task: System updates

|

||||

|

||||

Find out how to update your system with `dnf` and run the updates.

|

||||

|

||||

|

|

@ -112,7 +112,7 @@ It is possible that you don't find any updates. In this case, you can try it aga

|

|||

|

||||

🟢 Run `cs task system-update`.

|

||||

|

||||

## Task 4: Package installation and usage

|

||||

## Task: Package installation and usage

|

||||

|

||||

Install the package `cowsay` and find out how to use it!

|

||||

|

||||

|

|

|

|||

47

src/day_2/clis_of_the_day.md

Normal file

|

|

@ -0,0 +1,47 @@

|

|||

# CLIs of the day

|

||||

|

||||

## Cargo

|

||||

|

||||

```bash

|

||||

# Install. openssl-devel needed for cargo-update

|

||||

sudo dnf install cargo openssl-devel

|

||||

|

||||

# To be able to run cargo install-update -a

|

||||

cargo install cargo-update

|

||||

|

||||

# Install crate (package)

|

||||

cargo install CRATENAME

|

||||

|

||||

# Update installed crates

|

||||

cargo install-update -a

|

||||

```

|

||||

|

||||

## curl

|

||||

|

||||

We have already used `curl`, but not yet for downloading files.

|

||||

|

||||

```bash

|

||||

# Download file into current directory while using the default name

|

||||

curl -L LINK_TO_FILE -O

|

||||

|

||||

# Download file while giving the path to save the file into

|

||||

# (notice that we are using the lowercase -o now, not the uppercase -O)

|

||||

curl -L LINK_TO_FILE -o PATH

|

||||

```

|

||||

|

||||

`-L` tells `curl` to follow redirections (for example from `http` to `https`).

|

||||

|

||||

## xargs

|

||||

|

||||

```bash

|

||||

# Show the content of all files ending with '.sh'

|

||||

find . -type f -name '*.sh' | xargs cat

|

||||

```
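File names that contain spaces break the plain pipe above, because `xargs` splits its input on whitespace by default. A minimal sketch of the usual workaround, assuming GNU `find` and `xargs`; the directory and file names are made up for the demo:

```bash
# Demo directory with a script whose name contains a space (hypothetical names)
demo_dir=$(mktemp -d)
printf 'echo one\n' > "$demo_dir/plain.sh"
printf 'echo two\n' > "$demo_dir/with space.sh"

# -print0 separates the found paths with a NUL byte instead of a newline,
# and -0 tells xargs to split its input on NUL bytes only
content=$(find "$demo_dir" -type f -name '*.sh' -print0 | xargs -0 cat)
echo "$content"
```

Both files are printed, including the one with a space in its name. The order of the two files is not guaranteed.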

|

||||

|

||||

## ripgrep

|

||||

|

||||

```console

|

||||

$ rg '.*,(.*),.*' -r '$1' demo.txt

|

||||

```

|

||||

|

||||

## jq

|

||||

21

src/day_2/regex.md

Normal file

|

|

@ -0,0 +1,21 @@

|

|||

# Regex

|

||||

|

||||

**Reg**ular **ex**pressions

|

||||

|

||||

Can be used for example with `grep`, `rg`, `find`, `fd`, `nvim`, etc.

|

||||

|

||||

- `^`: Start of line

|

||||

- `$`: End of line

|

||||

- `()`: Group

|

||||

- `[abcd]`: Character set, here one of `a`, `b`, `c` or `d`

|

||||

- `[a-z]`: Character range, here `a` until `z`

|

||||

- `[^b-h]`: Negated character range, here any character except `b` to `h`

|

||||

- `.`: Any character

|

||||

- `.*`: 0 or more characters

|

||||

- `.+`: 1 or more characters

|

||||

- `\w`: Word character (letter, digit or underscore)

|

||||

- `\W`: Not a word character

|

||||

- `\d`: Digit

|

||||

- `\D`: Not digit

|

||||

- `\s`: Whitespace

|

||||

- `\S`: Not whitespace
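To try some of these patterns, a few lines can be piped into `grep -E`. A small sketch with made-up sample lines; since `\d` is not supported by `grep -E`, `[0-9]` is used for digits:

```bash
# Use byte-wise ranges so that [a-z] means exactly the lowercase ASCII letters
export LC_ALL=C

# Made-up sample input, one entry per line
sample=$(printf 'apple\nBanana\ncherry 42\n')

# ^[a-z]+$ : the whole line consists of lowercase letters only
lowercase_only=$(printf '%s\n' "$sample" | grep -E '^[a-z]+$')

# [0-9] : the line contains at least one digit
with_digit=$(printf '%s\n' "$sample" | grep -E '[0-9]')

# ^[^a-z] : the line starts with a character that is NOT a lowercase letter
not_lower_start=$(printf '%s\n' "$sample" | grep -E '^[^a-z]')

echo "$lowercase_only"
echo "$with_digit"
echo "$not_lower_start"
```

The three commands print `apple`, `cherry 42` and `Banana`.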

|

||||

31

src/day_2/shell_tricks.md

Normal file

|

|

@ -0,0 +1,31 @@

|

|||

# Shell tricks

|

||||

|

||||

## Expansion

|

||||

|

||||

```bash

|

||||

# mkdir -p dir/sub1 dir/sub2

|

||||

mkdir -p dir/sub{1,2}

|

||||

|

||||

# touch dir/sub1/file1.txt dir/sub1/file2.txt

|

||||

touch dir/sub1/file{1,2}.txt

|

||||

|

||||

# cp dir/sub1/file1.txt dir/sub1/file1.txt.bak

|

||||

cp dir/sub1/file1.txt{,.bak}

|

||||

```
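Brace expansion is done by the shell before the command runs, so `echo` can be used to preview what a command would receive. A minimal sketch, assuming Bash (brace expansion is not part of POSIX `sh`); the variable names are made up:

```bash
# Preview the arguments without creating anything
preview_dirs=$(echo dir/sub{1,2})
echo "$preview_dirs"

# An empty alternative expands to the bare prefix
preview_backup=$(echo file1.txt{,.bak})
echo "$preview_backup"

# Several braces in one word are combined with each other
preview_combined=$(echo dir/sub{1,2}/file{1,2}.txt)
echo "$preview_combined"
```

The first two lines of output are `dir/sub1 dir/sub2` and `file1.txt file1.txt.bak`. The third one lists all four combinations.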

|

||||

|

||||

## Globbing

|

||||

|

||||

```bash

|

||||

# Print content of all files ending with `.sh`

|

||||

cat *.sh

|

||||

|

||||

# Move all visible files and directories from dir1 to dir2

|

||||

mv dir1/* dir2

|

||||

|

||||

# Move all hidden files and directories from dir1 to dir2

|

||||

mv dir1/.* dir2

|

||||

|

||||

# Move all visible and hidden files and directories from dir1 to dir2

|

||||

# mv dir1/* dir1/.* dir2

|

||||

mv dir1/{,.}* dir2

|

||||

```
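In Bash, the shell option `dotglob` is another way to include hidden entries: when it is enabled, `*` also matches names starting with a dot, but never `.` and `..`. A minimal sketch, assuming Bash and using temporary demo directories with made-up names:

```bash
# Temporary demo directories (hypothetical names)
work_dir=$(mktemp -d)
mkdir "$work_dir/dir1" "$work_dir/dir2"
touch "$work_dir/dir1/visible.txt" "$work_dir/dir1/.hidden.txt"

# Let * match hidden entries too
shopt -s dotglob
mv "$work_dir"/dir1/* "$work_dir/dir2"
# Restore the default behaviour
shopt -u dotglob

# -A also lists hidden entries, but not . and ..
ls -A "$work_dir/dir2"
```

After the `mv`, both `visible.txt` and `.hidden.txt` are in `dir2`.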

|

||||

|

|

@ -2,99 +2,86 @@

|

|||

|

||||

Organize the files and directories of your tasks in separate directories!

|

||||

|

||||

## Task: Job scheduler

|

||||

## Task: Cargo

|

||||

|

||||

> Warning ⚠️ : This task is not an easy task. Don't give up quickly and ask for help if you don't get further!

|

||||

Use `cargo` to install the following crates:

|

||||

|

||||

In this task, we want to write our own job scheduler.

|

||||

- cargo-update

|

||||

- tealdeer

|

||||

|

||||

Understanding how job schedulers work is important when you are working on a computer cluster.

|

||||

It might take a long time to compile everything.

|

||||

|

||||

Computer clusters are shared by many users. Therefore, running jobs on a cluster has to be scheduled to make sure that the resources are shared properly.

|

||||

Add `$HOME/.cargo/bin` to your `PATH`.

|

||||

|

||||

In this task, we will keep it simple. No aspects of multiple users or any optimizations.

|

||||

`cargo-update` has to be installed to be able to run `cargo install-update -a`, which updates all installed crates. Try running the command. You should not find any updates since you have just installed the crates.

|

||||

|

||||

We want to be able to submit a job as a single script (without any dependencies). The submitted scripts should run one after another, for example to limit CPU usage.

|

||||

The crate `tealdeer` provides you with the program `tldr`.

|

||||

|

||||

We will use the program `inotifywait`. This program can monitor a directory and notify on changes within this directory.

|

||||

Run `tldr --update`. Now run the following two commands:

|

||||

|

||||

1. Find out which package installs `inotifywait` and install it.

|

||||

1. Read the manual of `inotifywait` for a better understanding of what it does.

|

||||

1. Find out how to tell `inotifywait` to keep monitoring a directory and not exit after the first event.

|

||||

1. Find out what events mean in the context of `inotifywait`.

|

||||

1. Create a new directory called `jobs` to be monitored.

|

||||

1. Create a new directory called `logs` that will be used later.

|

||||

1. Run `inotifywait` while telling it to monitor the directory `jobs`. Leave the command running in a terminal and open a second terminal (tab) to continue the work in.

|

||||

1. Create a file **outside** of the directory `jobs` and then copy it to the directory `jobs`.

|

||||

1. Go back to the first terminal and see what the output of `inotifywait` was.

|

||||

1. Based on the output, choose an event that you want to listen to with `inotifywait` that tells you when a file is _completely_ added to the directory `jobs`. Use the manual to read more about specific events.

|

||||

1. Find an option that lets you tell `inotifywait` to only notify when the chosen event occurs.

|

||||

1. Find an option that lets you format the output of the notification of `inotifywait`. Since we only listen on one event and monitor only one directory, an output that shows only the name of the new file should be enough.

|

||||

1. Enter the command that you have until now in a script. Now extend it by using a `while` loop that continuously listens on the notifications of `inotifywait`. Use the following snippet while replacing the sections with `(...)`:

|

||||

```bash

|

||||

inotifywait (...) | while read FILENAME

|

||||

do

|

||||

tldr dnf

|

||||

tldr apt

|

||||

```

|

||||

|

||||

It should be obvious to you what `tldr` does after you run the commands. Try it with other programs than `dnf` and `apt`!

|

||||

|

||||

## Task: ripgrep

|

||||

|

||||

The following website uses a PDF file but it does not let you download it: [https://knowunity.de/knows/biologie-neurobiologie-1c6a4647-4707-4d1b-8ffb-e7a750582921](https://knowunity.de/knows/biologie-neurobiologie-1c6a4647-4707-4d1b-8ffb-e7a750582921)

|

||||

|

||||

Now that you are kind of a "hacker", you want to use a workaround.

|

||||

|

||||

Use pipes `|`, `curl`, `rg` (ripgrep) and `xargs` to parse the HTML of the website, extract the link to the PDF file and download the file.

|

||||

|

||||

The link to the PDF file starts with `https://` and ends with `.pdf`.

|

||||

|

||||

Once that works, write a script that asks the user for the link to a document at [knowunity.de](https://knowunity.de) and downloads the PDF file.

|

||||

|

||||

## Task: Cows everywhere!

|

||||

|

||||

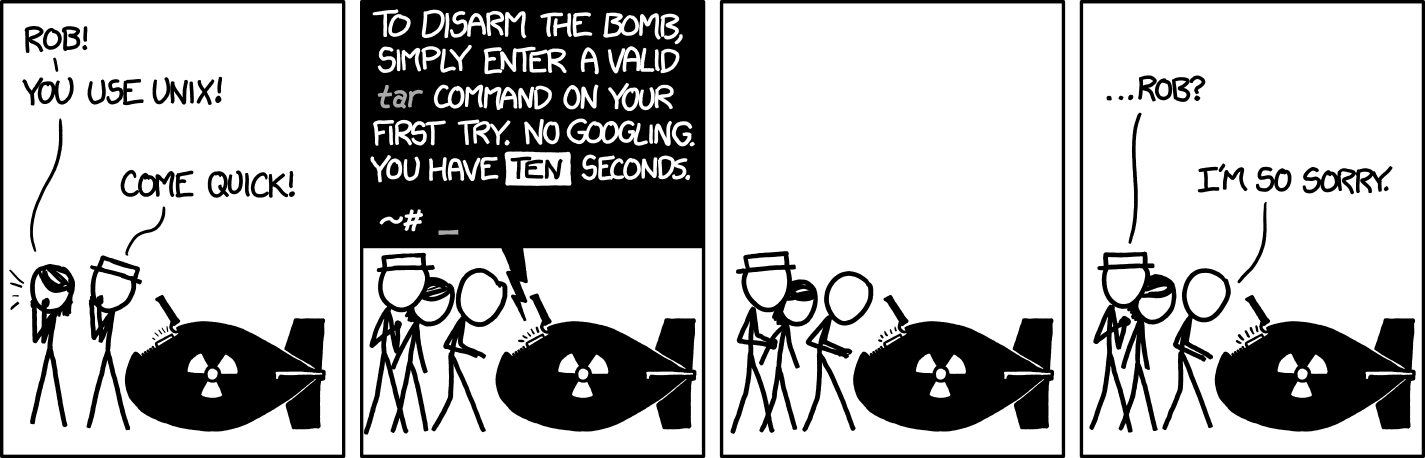

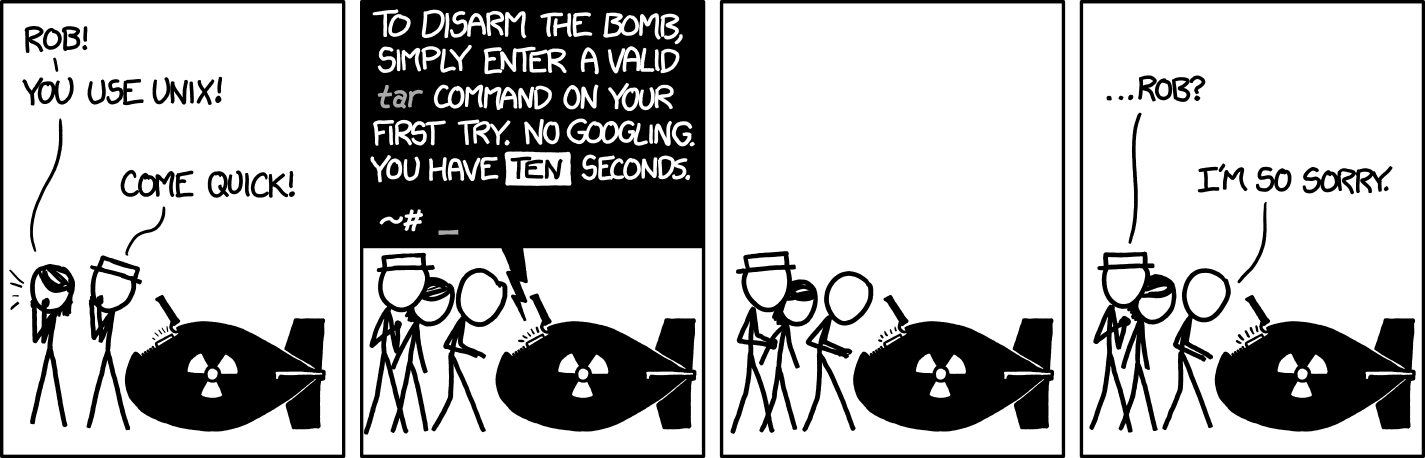

[](https://xkcd.com/1168/)

|

||||

|

||||

Download the source code of this book using `curl` as a `tar.gz` archive: [https://codeberg.org/Mo8it/How\_To\_Linux/archive/main.tar.gz](https://codeberg.org/Mo8it/How_To_Linux/archive/main.tar.gz)

|

||||

|

||||

We are not using Git at this point to practice dealing with archives.

|

||||

|

||||

Extract the files from the archive! (_Don't worry, you have more than 10 seconds_)

|

||||

|

||||

Use `find` to find all Markdown files (ending with `.md`). Then use `sed` to replace every match of `echo` with `cowsay` in the files found.

|

||||

|

||||

Why? Easy: Why not?

|

||||

|

||||

## Task: Parsing a CSV file

|

||||

|

||||

1. Use `curl` to take a look at the file with the following link: [https://gitlab.rlp.net/mobitar/julia\_course/-/raw/main/Day\_3/resources/fitting\_task\_data.csv](https://gitlab.rlp.net/mobitar/julia_course/-/raw/main/Day_3/resources/fitting_task_data.csv). The file contains measurements from a (fake) free fall experiment.

|

||||

1. Now that you know what the file contains, pipe the output to tools that let you remove the first 6 and last 2 lines. Afterwards, extract the first (measured height) and third column (measured time).

|

||||

1. Write a small Python script that processes the output of the command from the last step. Since this book is not about programming in Python or plotting, the simple code to process the output of the variable `h_t` is given below:

|

||||

|

||||

```

|

||||

#!/usr/bin/env python3

|

||||

|

||||

import matplotlib.pyplot as plt

|

||||

|

||||

(...)

|

||||

done

|

||||

```

|

||||

1. After a notification, the body of the `while` loop should first print the name of the script that was added. From now on, we only want to add scripts to the `jobs` directory.

|

||||

1. After printing the script name, run the script!

|

||||

1. Save the standard output and standard error of the script into two separate files in the `logs` directory. If the name of the script is `job.sh` for example, then the output should be in the files `logs/job.sh.out` and `logs/job.sh.err`.

|

||||

|

||||

##### Tips:

|

||||

h_values = []

|

||||

t_values = []

|

||||

|

||||

- Take a look at the examples from the sections of this day.

|

||||

- Take care of permissions.

|

||||

for line in h_t.strip().split("\n"):

|

||||

h, t = line.strip().split(",")

|

||||

h_values.append(h)

|

||||

t_values.append(t)

|

||||

|

||||

If you have extra time, read about the command `screen` on the internet. `screen` allows you to run commands in the background. This way, you don't need two terminals.

|

||||

|

||||

## Task: Job submitter

|

||||

|

||||

In this task we will write a small script that lets us submit a job script to the scheduler from the last task.

|

||||

|

||||

The script should take the path to the job script as a single required argument.

|

||||

|

||||

The script should then copy the job script to the directory `jobs` while adding the time and date to the beginning of the name of the job script in the `jobs` directory.

|

||||

|

||||

Read the manual of the command `date` to know how to get the time and date in the following format: `2022-08-22T20:00:00+00:00`.

|

||||

|

||||

If the name of the job script is `job.sh` for example, the job script should be named `2022-08-22T20:00:00+00:00_job.sh` in the `jobs` directory.

|

||||

|

||||

Use variables to write the script to make it more understandable.

|

||||

|

||||

#### Help

|

||||

|

||||

To save the output of a command into a variable, you have to use the following syntax:

|

||||

|

||||

```bash

|

||||

DATE=$(date ...)

|

||||

plt.plot(h_values, t_values)

|

||||

plt.xlabel("h")

|

||||

plt.ylabel("t")

|

||||

plt.savefig("h_t.pdf")

|

||||

```

|

||||

|

||||

Replace `...` with your code.
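As an illustration of the same syntax with a different command, the following sketch stores the output of `basename` in a variable. The path, the prefix and the variable names are made up for the example and are not part of the task:

```bash
# Hypothetical path to a job script
JOB_PATH="/tmp/demo/job.sh"

# $(...) runs the command and is replaced by its standard output
JOB_NAME=$(basename "$JOB_PATH")

# The variable can then be used to build other strings
echo "jobs/example_prefix_${JOB_NAME}"
```

The curly braces in `${JOB_NAME}` only mark where the variable name ends.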

|

||||

|

||||

To read the `n`-th argument that is provided to a script you write, you have to use `$n`.

|

||||

|

||||

Example script called `arg.sh`:

|

||||

|

||||

```bash

|

||||

#!/usr/bin/bash

|

||||

|

||||

echo "The first argument is: $1"

|

||||

```

|

||||

|

||||

When you run this script with an argument:

|

||||

|

||||

```console

|

||||

$ ./arg.sh "Hello"

|

||||

The first argument is: Hello

|

||||

```
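Because the job submitter expects exactly one argument, it can help to check the number of arguments first. A minimal sketch: `$#` holds the number of arguments, while the function name `require_one_argument` and the script name `submit.sh` in the usage message are made up for this example:

```bash
# Hypothetical helper: succeeds only if exactly one argument was given
require_one_argument() {
    if [ "$#" -ne 1 ]; then
        # Error messages belong on standard error
        echo "Usage: submit.sh JOB_SCRIPT" >&2
        return 1
    fi
    echo "The first argument is: $1"
}

# Called with one argument, the check passes
require_one_argument "job.sh"
```

Directly in a script (outside of a function), the same check would use `exit 1` instead of `return 1`.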

|

||||

|

||||

## Task: Submit a job

|

||||

|

||||

Write a few small scripts of your choice that take a long time to run and submit them using the script from the last task. Make sure that the scheduler is running in the background.

|

||||

|

||||

You can use the command `sleep` to simulate a job that needs a long time to run.

|

||||

|

||||

Submit your job script multiple times and take a look at the terminal that is running the scheduler to make sure that the job scripts are running one after the other.

|

||||

|

||||

Verify the redirection of the standard output and standard error in the directory `logs`.

|

||||

Use the command that you wrote in the last step to extract the two columns and save its output in a variable called `h_t` in the `(...)` block.

|

||||

1. Save the Python script into a file in an empty directory called `bash_python_harmony`.

|

||||

1. Use `poetry` to initialize an environment in the directory `bash_python_harmony`.

|

||||

1. Use `poetry` to add the package `matplotlib` to the environment.

|

||||

1. Use `poetry shell` to enter the environment.

|

||||

1. Run the script and check the generated PDF file `h_t.pdf`.

|

||||

|

|

|

|||

3

src/day_3/clis_of_the_day.md

Normal file

|

|

@ -0,0 +1,3 @@

|

|||

# CLIs of the day

|

||||

|

||||

## typos

|

||||

|

|

@ -1,240 +1 @@

|

|||

# Notes

|

||||

|

||||

## Shell tricks

|

||||

|

||||

### Expansion

|

||||

|

||||

```bash

|

||||

# mkdir -p dir/sub1 dir/sub2

|

||||

mkdir -p dir/sub{1,2}

|

||||

|

||||

# touch dir/sub1/file1.txt dir/sub1/file2.txt

|

||||

touch dir/sub1/file{1,2}.txt

|

||||

|

||||

# cp dir/sub1/file1.txt dir/sub1/file1.txt.bak

|

||||

cp dir/sub1/file1.txt{,.bak}

|

||||

```

|

||||

|

||||

### Globbing

|

||||

|

||||

```bash

|

||||

# Print content of all files ending with `.sh`

|

||||

cat *.sh

|

||||

|

||||

# Move all visible files and directories from dir1 to dir2

|

||||

mv dir1/* dir2

|

||||

|

||||

# Move all hidden files and directories from dir1 to dir2

|

||||

mv dir1/.* dir2

|

||||

|

||||

# Move all visible and hidden files and directories from dir1 to dir2

|

||||

# mv dir1/* dir1/.* dir2

|

||||

mv dir1/{,.}* dir2

|

||||

```

|

||||

|

||||

## Cargo

|

||||

|

||||

```bash

|

||||

# Install. openssl-devel needed for cargo-update

|

||||

sudo dnf install cargo openssl-devel

|

||||

|

||||

# To be able to run cargo install-update -a

|

||||

cargo install cargo-update

|

||||

|

||||

# Install crate (package)

|

||||

cargo install CRATENAME

|

||||

|

||||

# Update installed crates

|

||||

cargo install-update -a

|

||||

```

|

||||

|

||||

## bashrc

|

||||

|

||||

The following lines are written at the end of `~/.bashrc`.

|

||||

|

||||

### PATH

|

||||

|

||||

Add Cargo binaries to `PATH`:

|

||||

|

||||

```bash

|

||||

export PATH="$PATH:$HOME/.cargo/bin"

|

||||

```
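Every new interactive Bash shell reads `~/.bashrc`, so a nested shell would append the same directory a second time. A slightly more defensive sketch appends it only if it is missing; the `case` pattern is one common idiom for this, not the only way:

```bash
# Append $HOME/.cargo/bin to PATH only if it is not already listed.
# The surrounding colons make sure that only a whole entry matches.
case ":$PATH:" in
    *":$HOME/.cargo/bin:"*) ;;  # already present, nothing to do
    *) export PATH="$PATH:$HOME/.cargo/bin" ;;
esac
```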

|

||||

|

||||

### Alias

|

||||

|

||||

```bash

|

||||

alias rm="trash"

|

||||

```

|

||||

|

||||

## SSH

|

||||

|

||||

### Setup host

|

||||

|

||||

In `~/.ssh/config`

|

||||

|

||||

```

|

||||

Host HOST

|

||||

HostName SERVERIP

|

||||

User SERVERUSER

|

||||

```

|

||||

|

||||

### Generate key pair

|

||||

|

||||

```bash

|

||||

ssh-keygen -t ed25519 -C "COMMENT"

|

||||

```

|

||||

|

||||

Leave blank to take default for the prompt `Enter file in which to save the key (/home/USERNAME/.ssh/id_ed25519)`.

|

||||

|

||||

Then enter a passphrase for your key. **You should not leave it blank!**

|

||||

|

||||

### Add public key to server

|

||||

|

||||

```bash

|

||||

ssh-copy-id -i ~/.ssh/id_ed25519.pub HOST

|

||||

```

|

||||

|

||||

### Connect

|

||||

|

||||

```bash

|

||||

ssh HOST

|

||||

```

|

||||

|

||||

### Config on server

|

||||

|

||||

**Very important for security!** Only after adding the public key to the server!

|

||||

|

||||

> WARNING ⚠️ :

|

||||

>

|

||||

> Verify that you are only asked for the passphrase of the SSH key before continuing in this section!

|

||||

>

|

||||

> If you are asked for the password of the user on the server when connecting, then the authentication with a key did not work. Therefore, don't set `PasswordAuthentication no`! Fix the issue with the key authentication first. **Otherwise, you will be locked out of the server!** ⚠️

|

||||

|

||||

In `/etc/ssh/sshd_config` on the server:

|

||||

|

||||

Uncomment line with `PasswordAuthentication` and set it to `PasswordAuthentication no`

|

||||

|

||||

Save and exit, then run:

|

||||

|

||||

```bash

|

||||

sudo systemctl restart sshd

|

||||

```

|

||||

|

||||

If you are locked out after running this command, then you did not take the warning above seriously!

|

||||

|

||||

### Copy files

|

||||

|

||||

From server:

|

||||

|

||||

```bash

|

||||

scp HOST:SRC_PATH DEST_PATH

|

||||

```

|

||||

|

||||

To server:

|

||||

|

||||

```bash

|

||||

scp SRC_PATH HOST:DEST_PATH

|

||||

```

|

||||

|

||||

Options:

|

||||

|

||||

- `-r`, `--recursive`: For directories.

|

||||

|

||||

## Rsync

|

||||

|

||||

From server:

|

||||

|

||||

```bash

|

||||

rsync -Lahz HOST:SRC_PATH DEST_PATH

|

||||

```

|

||||

|

||||

To server:

|

||||

|

||||

```bash

|

||||

rsync -Lahz SRC_PATH HOST:DEST_PATH

|

||||

```

|

||||

|

||||

Options:

|

||||

|

||||

- `-a`, `--archive`: Set of useful options to preserve permissions, use recursive mode, etc.

|

||||

- `-h`, `--human-readable`: Output numbers in a human-readable format.

|

||||

- `-z`, `--compress`: Use compression.

|

||||

- `--partial`: Keep partially transferred files so that an interrupted transfer can be continued.

|

||||

- `-L`, `--copy-links`: Copy the files that symlinks point to instead of the symlinks themselves.

|

||||

- `-v`, `--verbose`: Show more information.

|

||||

- `--delete`: Delete files from `DEST_PATH` if they don't exist on `SRC_PATH` anymore. **Use with caution!!!**

|

||||

|

||||

## Systemd

|

||||

|

||||

Check status of a service:

|

||||

|

||||

```bash

|

||||

sudo systemctl status SERVICENAME

|

||||

```

|

||||

|

||||

Enable service:

|

||||

|

||||

```bash

|

||||

sudo systemctl enable SERVICENAME

|

||||

```

|

||||

|

||||

Start service:

|

||||

|

||||

```bash

|

||||

sudo systemctl start SERVICENAME

|

||||

```

|

||||

|

||||

Enable and start service at the same time:

|

||||

|

||||

```bash

|

||||

sudo systemctl enable --now SERVICENAME

|

||||

```

|

||||

|

||||

Disable service:

|

||||

|

||||

```bash

|

||||

sudo systemctl disable SERVICENAME

|

||||

```

|

||||

|

||||

Stop service:

|

||||

|

||||

```bash

|

||||

sudo systemctl stop SERVICENAME

|

||||

```

|

||||

|

||||

Disable and stop service at the same time:

|

||||

|

||||

```bash

|

||||

sudo systemctl disable --now SERVICENAME

|

||||

```

|

||||

|

||||

## Firewalld

|

||||

|

||||

Install and enable firewalld:

|

||||

|

||||

```bash

|

||||

sudo dnf install firewalld

|

||||

sudo systemctl enable --now firewalld

|

||||

```

|

||||

|

||||

View open ports and services:

|

||||

|

||||

```bash

|

||||

sudo firewall-cmd --list-all

|

||||

```

|

||||

|

||||

Open ports 80 (http) and 443 (https):

|

||||

|

||||

```bash

|

||||

sudo firewall-cmd --add-service http

|

||||

sudo firewall-cmd --add-service https

|

||||

sudo firewall-cmd --runtime-to-permanent

|

||||

```

|

||||

|

||||

or:

|

||||

|

||||

```bash

|

||||

sudo firewall-cmd --add-port 80/tcp

|

||||

sudo firewall-cmd --add-port 443/tcp

|

||||

sudo firewall-cmd --runtime-to-permanent

|

||||

```

|

||||

|

|

|

|||

|

|

@ -2,108 +2,22 @@

|

|||

|

||||

Do the tasks in the given order! They depend on each other.

|

||||

|

||||

## Task 1: Cargo

|

||||

## Task: SSH key

|

||||

|

||||

Use `cargo` to install the following crates:

|

||||

## Task: GitUI

|

||||

|

||||

- cargo-update

|

||||

- tealdeer

|

||||

## Task: Lazygit

|

||||

|

||||

It might take a long time to compile everything.

|

||||

In this task, you will learn to use Lazygit.

|

||||

|

||||

Add `$HOME/.cargo/bin` to your `PATH`.

|

||||

|

||||

`cargo-update` has to be installed to be able to run `cargo install-update -a`, which updates all installed crates. Try running the command. You should not find any updates since you have just installed the crates.

|

||||

|

||||

The crate `tealdeer` provides you with the program `tldr`.

|

||||

|

||||

Run `tldr --update`. Now run the following two commands:

|

||||

|

||||

```bash

|

||||

tldr dnf

|

||||

tldr apt

|

||||

```

|

||||

|

||||

It should be obvious to you what `tldr` does after you run the commands. Try it with other programs than `dnf` and `apt`!

|

||||

|

||||

## Task 2: SSH

|

||||

|

||||

Generate an SSH key pair and send me the public key by email: mo8it@proton.me

|

||||

|

||||

Enter a passphrase while generating the key pair!

|

||||

|

||||

Don't send me the private key!!! **You should never send your private SSH keys to anyone!**

|

||||

|

||||

The public key ends with `.pub`.

|

||||

|

||||

I will then append your public key to `~/.ssh/authorized_keys` on the server that we will use in the next tasks. After I add your public key, you will be able to login to the server and do the next tasks.

|

||||

|

||||

Create the file `~/.ssh/config` and add the server as a host with the name `linux-lab`.

|

||||

|

||||

Enter this IP: 45.94.58.19

|

||||

Enter this user: admin

|

||||

|

||||

After I add your public key, connect to the server using the host name that you entered in `~/.ssh/config`, which should be `linux-lab`.

|

||||

|

||||

## Task 3: User creation

|

||||

|

||||

1. Create a user for yourself on the server after connecting with SSH. To do so, run:

|

||||

```bash

|

||||

sudo useradd USERNAME

|

||||

```

|

||||

|

||||

Replace `USERNAME` with your name.

|

||||

|

||||

1. Now set a password for the new user:

|

||||

|

||||

```bash

|

||||

sudo passwd USERNAME

|

||||

```

|

||||

1. For the new user to be able to use `sudo`, it has to be added to the `wheel` group:

|

||||

|

||||

```bash

|

||||

sudo usermod -aG wheel USERNAME

|

||||

```

|

||||

|

||||

`-aG` stands for _append to group(s)_.

|

||||

|

||||

(On Debian based distros, the user should be added to the `sudo` group instead of `wheel`.)

|

||||

|

||||

1. Now, change your user to the new user:

|

||||

|

||||

```bash

|

||||

sudo su USERNAME

|

||||

```

|

||||

|

||||

You will see that the user name did change in the prompt.

|

||||

|

||||

1. Run the following command for verification:

|

||||

|

||||

```bash

|

||||

whoami

|

||||

```

|

||||

|

||||

It should not output "admin"!

|

||||

|

||||

Yes, the command is called `whoami`. Linux is kind of philosophical 🤔

|

||||

|

||||

1. Now, verify that you can run `sudo` as the new user:

|

||||

|

||||

```bash

|

||||

sudo whoami

|

||||

```

|

||||

|

||||

You should see "root" as output because `sudo` runs a command as the `root` user.

|

||||

|

||||

1. `cd` to the home directory of the new user.

|

||||

1. Make sure that you are in the home directory of the new user! Run `pwd` to verify that you are NOT in `/home/admin`. **`PLEASE DON'T TOUCH /home/admin/.ssh`** ⚠️ . Now, create the directory `~/.ssh` in the home directory of the new user. Change the permissions of `~/.ssh` such that only the user has read, write and execution permissions. _group_ and _others_ should have no permissions for `~/.ssh`!

|

||||

1. Create the file `authorized_keys` inside `~/.ssh`. Only the user should have read and write permissions for the file. _group_ and _others_ should have no permissions for the file!

|

||||

1. Copy the content of your public key file (with `.pub` as extension) to this file. It should be one line! Then save the file.

|

||||

1. Logout from the server. Go to `~/.ssh/config` that you did write at the beginning of this task. Change the user for the host `linux-lab` from `admin` to `USERNAME` where `USERNAME` is the name of the new user that you did create on the server.

|

||||

1. Try to connect using the host name again. If you did everything right, you should be connected and be the user that you did create. Run `whoami` to verify that the output is not "admin".

|

||||

|

||||

## Task 4: File transfer

|

||||

|

||||

Use `scp` and then `rsync` to transfer the files that you did create during the course to the server `linux-lab`.

|

||||

|

||||

Do you notice any differences between the two commands?

|

||||

1. Install Lazygit. Follow the instructions for Fedora on [github.com/jesseduffield/lazygit](https://github.com/jesseduffield/lazygit). You might need to install `dnf-plugins-core` first to be able to add something via _COPR_ (Cool Other Package Repo).

|

||||

1. Put the files and directories that you did create during the course into a directory.

|

||||

1. Go into the directory.

|

||||

1. Run `lazygit`. It will initialize a git repository in this directory if none exists yet. Confirm the initialization. From now on, every git operation should be done in Lazygit.

|

||||

1. Add everything as staged.

|

||||

1. Commit.

|

||||

1. Create a repository on [git.mo8it.com](https://git.mo8it.com).

|

||||

1. Add the new remote.

|

||||

1. Push to the new remote.

|

||||

1. Modify a file (or multiple files).

|

||||

1. Verify your changes in Lazygit, stage, commit and push.

|

||||

|

|

|

|||

64

src/day_4/clis_of_the_day.md

Normal file

|

|

@ -0,0 +1,64 @@

|

|||

# CLIs of the day

|

||||

|

||||

## cut

|

||||

|

||||

Demo file `demo.txt`:

|

||||

|

||||

```

|

||||

here,are,some

|

||||

comma,separated,values

|

||||

de mo,file,t x t

|

||||

```

|

||||

|

||||

```bash

|

||||

# Get the N-th column by using SEP as separator

|

||||

cut -d SEP -f N FILE

|

||||

```

|

||||

|

||||

Example:

|

||||

|

||||

```console

|

||||

$ cut -d "," -f 1 demo.txt

|

||||

here

|

||||

comma

|

||||

de mo

|

||||

```

|

||||

|

||||

You can also pipe into `cut` instead of specifying `FILE`.
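`-f` also accepts a comma-separated list of columns. A short sketch that pipes the content of `demo.txt` into `cut` and keeps the first and the third column; the variable name is made up:

```bash
# Same content as demo.txt above, piped in instead of read from a file
columns=$(printf 'here,are,some\ncomma,separated,values\nde mo,file,t x t\n' | cut -d ',' -f 1,3)
echo "$columns"
```

The selected columns are printed with the same separator, so the first output line is `here,some`.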

|

||||

|

||||

## sed

|

||||

|

||||

```bash

|

||||

# Substitute

|

||||

sed 's/OLD/NEW/g' FILE

|

||||

|

||||

# Delete line that contains PATTERN

|

||||

sed '/PATTERN/d' FILE

|

||||

```

|

||||

|

||||

Example:

|

||||

|

||||

```console

|

||||

$ sed 's/values/strings/g' demo.txt

|

||||

here,are,some

|

||||

comma,separated,strings

|

||||

de mo,file,t x t

|

||||

$ sed '/separated/d' demo.txt

|

||||

here,are,some

|

||||

de mo,file,t x t

|

||||

```

|

||||

|

||||

You can also pipe into `sed` instead of specifying `FILE`.

|

||||

|

||||

When you specify `FILE`, you can use the option `-i` to operate _in place_. This means that the file is modified directly instead of the result being printed.
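A minimal sketch of the `-i` option, assuming GNU `sed` as shipped with Fedora (other implementations such as BSD `sed` expect an argument after `-i`). It works on a temporary file with the demo content so that nothing important is modified:

```bash
# Temporary file with the demo content
demo_file=$(mktemp)
printf 'here,are,some\ncomma,separated,values\nde mo,file,t x t\n' > "$demo_file"

# Without -i, the result is only printed and the file stays unchanged
sed 's/values/strings/g' "$demo_file" > /dev/null

# With -i, nothing is printed and the file itself is modified
sed -i 's/values/strings/g' "$demo_file"

cat "$demo_file"
```

Since the modification cannot be undone, it is worth running the command without `-i` first and checking the printed result.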

|

||||

|

||||

## find

|

||||

|

||||

```bash

|

||||

# Find everything ending with `.sh` of type file `f` using globbing

|

||||

find . -type f -name '*.sh'

|

||||

|

||||

|

||||

# Using regex

|

||||

find . -type f -regex '.+\.sh'

|

||||

```
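One difference between the two variants: `-name` is matched against the file name only, while `-regex` has to match the whole path as printed by `find`, which is why the pattern above starts with `.+`. A small sketch, assuming GNU `find` and a temporary demo directory with made-up names:

```bash
# Temporary demo tree (hypothetical names)
demo_dir=$(mktemp -d)
mkdir "$demo_dir/scripts"
touch "$demo_dir/scripts/run.sh" "$demo_dir/notes.md"
cd "$demo_dir"

# Globbing on the file name
by_name=$(find . -type f -name '*.sh')

# Regex on the whole path, here ./scripts/run.sh
by_regex=$(find . -type f -regex '.+\.sh')

# A regex that only describes the file name matches nothing
name_only_regex=$(find . -type f -regex 'run\.sh')

echo "$by_name"
echo "$by_regex"
```

Both variables contain `./scripts/run.sh`, while `name_only_regex` stays empty.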

|

||||

|

|

@ -1,61 +1,120 @@

|

|||

# Notes

|

||||

|

||||

## Podman

|

||||

## Vim

|

||||

|

||||

- `:q`: Quit (**very important!**)

|

||||

- `:q!`: Quit without saving (**important!**)

|

||||

- `j`: Down

|

||||

- `k`: Up

|

||||

- `h`: Left

|

||||

- `l`: Right

|

||||

- `i`: Insert at left of cursor

|

||||

- `a`: Insert at right of cursor (append)

|

||||

- `I`: Insert at beginning of line

|

||||

- `A`: Append to end of line

|

||||

- `Esc`: Normal mode

|

||||

- `w`: Go to beginning of next word

|

||||

- `b`: Go to beginning of previous word

|

||||

- `e`: Go to end of word

|

||||

- `gg`: Go to beginning of file

|

||||

- `G`: Go to end of file

|

||||

- `0`: Go to beginning of line

|

||||

- `$`: Go to end of line

|

||||

- `%`: Go to the other bracket

|

||||

- `u`: Undo

|

||||

- `Ctrl+r`: Redo

|

||||

- `:h`: Help

|

||||

- `:w`: Write buffer

|

||||

- `:wq`: Write buffer and exit

|

||||

- `/PATTERN`: Search

|

||||

- `n`: Next match

|

||||

- `N`: Previous match

|

||||

- `*`: Next match of the word under cursor

|

||||

- `o`: Add line below and enter insert mode

|

||||

- `O`: Add line above and enter insert mode

|

||||

- `v`: Start selection

|

||||

- `V`: Line selection

|

||||

- `y`: Yank (copy)

|

||||

- `p`: Paste

|

||||

- `x`: Delete one character

|

||||

- `dw`: Delete word

|

||||

- `dd`: Delete line

|

||||

- `D`: Delete until end of line

|

||||

- `cw`: Change word

|

||||

- `cc`: Change line

|

||||

- `C`: Change until end of line

|

||||

- `di(`: Delete inside bracket `(`. Can be used with other brackets and quotation marks.

|

||||

- `da(`: Same as above but delete around, not inside.

|

||||

- `ci(`: Change inside bracket `(`. Can be used with other brackets and quotation marks.

|

||||

- `ca(`: Same as above but change around, not inside.

|

||||

- `:%s/OLD/NEW/g`: Substitute `OLD` with `NEW` in the whole file (with regex)

|

||||

- `:%s/OLD/NEW/gc`: Same as above but ask for confirmation for every substitution

|

||||

- `:N`: Go to line number `N`

|

||||

- `.`: Repeat last action

|

||||

- `<` and `>`: Indentation

|

||||

- `q`: Start recording a macro (followed by macro character)

|

||||

|

||||

## Symlinks

|

||||

|

||||

```bash

|

||||

# Search for image

|

||||

podman search python

|

||||

# Soft link

|

||||

ln -s SRC_PATH DEST_PATH

|

||||

|

||||

# Pull image

|

||||

podman pull docker.io/library/python:latest

|

||||

|

||||

# See pulled images

|

||||

podman images

|

||||

|

||||

# Run container and remove it afterwards

|

||||

podman run -it --rm docker.io/library/python:latest bash

|

||||

|

||||

# Create network

|

||||

podman network create NETWORKNAME

|

||||

|

||||

# Create container

|

||||

podman create \

|

||||

--name CONTAINERNAME \

|

||||

--network NETWORKNAME \

|

||||

-e ENVVAR="Some value for the demo environment variable" \

|

||||

--tz local \

|

||||

docker.io/library/python:latest

|

||||

|

||||

# Start container

|

||||

podman start CONTAINERNAME

|

||||

|

||||

# Enter a running container

|

||||

podman exec -it CONTAINERNAME bash

|

||||

|

||||

# Stop container

|

||||

podman stop CONTAINERNAME

|

||||

|

||||

# Generate systemd files

|

||||

podman generate systemd --new --files --name CONTAINERNAME

|

||||

|

||||

# Create directory for user's systemd services

|

||||

mkdir -p ~/.config/systemd/user

|

||||

|

||||

# Place service file

|

||||

mv container-CONTAINERNAME.service ~/.config/systemd/user

|

||||

|

||||

# Activate user's service (container)

|

||||

systemctl --user enable --now container-CONTAINERNAME

|

||||

# Hard link

|

||||

ln SRC_PATH DEST_PATH

|

||||

```

|

||||

|

||||

Keep user's systemd services live after logging out:

|

||||

## Python scripting

|

||||

|

||||

```bash

|

||||

sudo loginctl enable-linger USERNAME

|

||||

```python-repl

|

||||

>>> import subprocess

|

||||

>>> proc = subprocess.run(["ls", "/home/student"])

|

||||

(...)

|

||||

CompletedProcess(args=['ls', '/home/student'], returncode=0)

|

||||

>>> proc.returncode

|

||||

0

|

||||

>>> proc = subprocess.run("ls /home/student", shell=True)

|

||||

(...)

|

||||

CompletedProcess(args='ls /home/student', returncode=0)

|

||||

>>> proc = subprocess.run("ls /home/student", shell=True, capture_output=True, text=True)

|

||||

CompletedProcess(args='ls /home/student', returncode=0, stdout='(...)', stderr='')

|

||||

>>> proc.stdout

|

||||

(...)

|

||||

>>> proc.stderr

|

||||

>>> proc = subprocess.run("ls /home/nonexistent", shell=True, capture_output=True, text=True)

|

||||

CompletedProcess(args='ls /home/nonexistent', returncode=2, stdout='', stderr="ls: cannot access '/home/nonexistent': No such file or directory\n")

|

||||

>>> proc = subprocess.run("ls /home/nonexistent", shell=True, capture_output=True, text=True, check=True)

|

||||

---------------------------------------------------------------------------

|

||||

CalledProcessError Traceback (most recent call last)

|

||||

Input In [8], in <cell line: 1>()

|

||||

----> 1 subprocess.run("ls /home/nonexistent", shell=True, capture_output=True, text=True, check=True)

|

||||

|

||||

File /usr/lib64/python3.10/subprocess.py:524, in run(input, capture_output, timeout, check, *popenargs, **kwargs)

|

||||

522 retcode = process.poll()

|

||||

523 if check and retcode:

|

||||

--> 524 raise CalledProcessError(retcode, process.args,

|

||||

525 output=stdout, stderr=stderr)

|

||||

526 return CompletedProcess(process.args, retcode, stdout, stderr)

|

||||

|

||||

CalledProcessError: Command 'ls /home/nonexistent' returned non-zero exit status 2.

|

||||

```
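The session above shows that `check=True` raises `CalledProcessError` on a non-zero exit status. A minimal sketch of catching that exception in a script could look like the following. The helper name `run_command` and the demo commands are assumptions for illustration; the Python interpreter is used as the command so that the example does not depend on any directory existing.

```python
import subprocess
import sys


def run_command(args):
    """Run a command and return (returncode, stdout, stderr) without raising."""
    try:
        # check=True raises CalledProcessError on a non-zero exit status.
        proc = subprocess.run(args, capture_output=True, text=True, check=True)
    except subprocess.CalledProcessError as error:
        # The exception carries the exit status and the captured output.
        return error.returncode, error.stdout, error.stderr
    return proc.returncode, proc.stdout, proc.stderr


# Hypothetical demo commands, not part of the course material.
ok = run_command([sys.executable, "-c", "print('hello')"])
failed = run_command([sys.executable, "-c", "import sys; sys.exit(2)"])
print(ok)
print(failed)
```

Passing the command as a list, as done here, avoids `shell=True` and with it any quoting problems in arguments.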

|

||||

|

||||

Options:

|

||||

Most used modules when writing system scripts with Python: `pathlib`, `os`, `shutil`.

|

||||

|

||||

- `-v`, `--volume`: `SRC_PATH:DEST_PATH:L`. Label should be one of `z`, `z,ro`, `Z` or `Z,ro`.

|

||||

- `--label "io.containers.autoupdate=registry"` for `podman auto-update`

|

||||

- `-p`, `--publish`: `SERVER_PORT:CONTAINER_PORT`

|

||||

```python-repl

|

||||

>>> from pathlib import Path

|

||||

>>> p = Path.home() / "day_5"

|

||||

PosixPath('/home/student/day_5')

|

||||

>>> p.is_dir()

|

||||

(...)

|

||||

>>> p.is_file()

|

||||

(...)

|

||||

>>> p.exists()

|

||||

(...)

|

||||

>>> p.chmod(0o700)

|

||||

(...)

|

||||

>>> rel = Path(".")

|

||||

PosixPath('.')

|

||||

>>> rel.resolve()

|

||||

PosixPath('/home/student/(...)')

|

||||

```
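As a small sketch of how these `pathlib` calls combine in a script, the following creates a directory, restricts its permissions and lists its content. It works inside a temporary directory so that nothing in your home directory is touched; the names `logs` and `job.sh.out` are only examples.

```python
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp)

    # Create the directory (no error if it already exists).
    logs = base / "logs"
    logs.mkdir(parents=True, exist_ok=True)

    # Only the owner may read, write and enter the directory.
    logs.chmod(0o700)

    # Write a file and list the directory content.
    out_file = logs / "job.sh.out"
    out_file.write_text("done\n")
    names = sorted(path.name for path in logs.iterdir())
    mode = logs.stat().st_mode & 0o777

    print(names, oct(mode))
```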

|

||||

|

|

|

|||

|

|

@ -1,195 +1,124 @@

|

|||

# Tasks

|

||||

|

||||

## Compilation in containers

|

||||

## Task: Vim macros

|

||||

|

||||

> 📍 : This task should be done on the Contabo server after connecting with SSH as the user that you created yesterday on the server (not admin).

|

||||

In this task, we will learn about the power of macros in Vim.

|

||||

|

||||

We want to practice compilation and containers, so let's compile in a container!

|

||||

1. Visit [this URL](https://www.randomlists.com/random-names?qty=500), select the generated 500 names with the mouse and copy them.

|

||||

1. Create a new text file and open it with `nvim`.

|

||||

1. Paste the names into the file. You should see 500 lines with a name in each line.

|

||||

1. Record a macro that changes a line in the form `FIRST_NAME LAST_NAME` to `("FIRST_NAME", "LAST_NAME"),`.

|

||||

1. Run the macro on all lines.

|

||||

1. Now go to the beginning of the file (`gg`) and add this line as a first line: `names = [`

|

||||

1. Go to the end of the file (`G`) and add this line as last line: `]`

|

||||

|

||||

In this task, we want to compile the program `tmate`.

|

||||

Congratulations, you just converted the names into a form that can be used directly by a Python program! It is a list of tuples now.
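To check what the macro is supposed to produce, the same transformation can be sketched in Python. This is only an illustration of the target format, not a replacement for practicing the macro. The function name `to_tuple_line` is made up, and the sketch assumes that everything after the first space belongs to the last name.

```python
def to_tuple_line(line):
    """Turn 'FIRST_NAME LAST_NAME' into '("FIRST_NAME", "LAST_NAME"),'."""
    # Split only at the first run of whitespace.
    first_name, last_name = line.split(maxsplit=1)
    return f'("{first_name}", "{last_name}"),'


converted = to_tuple_line("Ada Lovelace")
print(converted)
```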

|

||||

|

||||

1. Start an Ubuntu container with `podman run -it --rm --name tmate-compiler ubuntu:latest bash`.

|

||||

1. Go to the [website of `tmate`](https://tmate.io/) and find out how to compile from source (there are instructions for compiling on Ubuntu).

|

||||

1. Follow the compilation instructions in the container.

|

||||

1. After compilation, you will find the binary `tmate` in the directory of the git repository.

|

||||

1. Don't exit the container yet, otherwise you will lose what you have done in it. Now open a new terminal (tab) and copy the binary `tmate` from the container to the directory `bin` in your home directory. Use the command `podman cp CONTAINERNAME:SRC_PATH DESTINATION_PATH`.

|

||||

1. Verify that the binary `tmate` was copied to `DESTINATION_PATH` and then exit the container in the first terminal (tab).

|

||||

## Task: Job scheduler

|

||||

|

||||

Now write a script called `compile_tmate.sh` that automates what you have done in the container to compile `tmate`. Just copy all the commands that you used in the container to a script.

|

||||

> Warning ⚠️ : This task is not an easy task. Don't give up quickly and ask for help if you don't get further!

|

||||

|

||||

Add to the end of the script `mv PATH_TO_TMATE_BINARY_IN_CONTAINER /volumes/bin` to copy the binary to the directory `/volumes/bin` after compilation.

|

||||

In this task, we want to write our own job scheduler.

|

||||

|

||||

Create a directory called `scripts` and put the script in it.

|

||||

Understanding how job schedulers work is important when you are working on a computer cluster.

|

||||

|

||||

Now write a second script in the parent directory of the directory `scripts`. The second script should automate creating the container that runs the first script.

|

||||

Computer clusters are shared by many users. Therefore, running jobs on a cluster has to be scheduled to make sure that the resources are shared properly.

|

||||

|

||||

Do the following in the second script:

|

||||

In this task, we will keep it simple. No aspects of multiple users or any optimizations.

|

||||

|

||||

1. Check if `scripts/compile_tmate.sh` does NOT exist. In this case you should print a useful message that explains why the script terminates and then exit with error code 1.

|

||||

1. Make sure that `scripts/compile_tmate.sh` is executable for the user.

|

||||

1. Create a directory called `bin` (next to the directory `scripts`) if it does not already exist.

|

||||

1. Use the following snippet:

|

||||

We want to be able to submit a job as a single script (without any dependencies). The submitted scripts should run one after another, for example to limit CPU usage.

|

||||

|

||||

We will use the program `inotifywait`. This program can monitor a directory and notify on changes within this directory.

|

||||

|

||||

1. Find out which package installs `inotifywait` and install it.

|

||||

1. Read the manual of `inotifywait` for a better understanding of what it does.

|

||||

1. Find out how to tell `inotifywait` to keep monitoring a directory and not exit after the first event.

|

||||

1. Find out what events mean in the context of `inotifywait`.

|

||||

1. Create a new directory called `jobs` to be monitored.

|

||||

1. Create a new directory called `logs` that will be used later.

|

||||

1. Run `inotifywait` while telling it to monitor the directory `jobs`. Leave the command running in a terminal and open a second terminal (tab) to continue the work in.

|

||||

1. Create a file **outside** of the directory `jobs` and then copy it to the directory `jobs`.

|

||||

1. Go back to the first terminal and see what the output of `inotifywait` was.

|

||||

1. Based on the output, choose an event that you want to listen to with `inotifywait` that tells you when a file is _completely_ added to the directory `jobs`. Use the manual to read more about specific events.

|

||||

1. Find an option that lets you tell `inotifywait` to only notify when the chosen event occurs.

|

||||

1. Find an option that lets you format the output of the notification of `inotifywait`. Since we only listen on one event and monitor only one directory, an output that shows only the name of the new file should be enough.

|

||||

1. Enter the command that you have until now in a script. Now extend it by using a `while` loop that continuously listens on the notifications of `inotifywait`. Use the following snippet while replacing the sections with `(...)`:

|

||||

```bash

|

||||

podman run -it --rm \

|

||||

--name tmate-compiler \

|

||||

-v ./scripts:/volumes/scripts:Z,ro \

|

||||

-v ./bin:/volumes/bin:Z \

|

||||

docker.io/library/ubuntu:latest \

|

||||

/volumes/scripts/compile_tmate.sh

|

||||

inotifywait (...) | while read FILENAME

|

||||

do

|

||||

(...)

|

||||

done

|

||||

```

|

||||

|

||||

It creates a container that runs the script `compile_tmate.sh` and is removed afterwards (because of `--rm`).

|

||||

|

||||

The `scripts` directory is mounted to be able to give the container access to the script `compile_tmate.sh`. The directory is mounted as _read only_ (`ro`) because it will not be modified.

|

||||

|

||||

The `bin` directory is mounted to be able to transfer the binary into it before the container exits.

|

||||

|

||||

After running the second script, you should see the container compiling and then exiting. At the end, you should find the binary `tmate` in the `bin` directory.

|

||||

|

||||

Now that you have the program `tmate`, find out what it does! Try it with a second person.

|

||||

1. After a notification, the body of the `while` loop should first print the name of the script that was added. From now on, we only want to add scripts to the `jobs` directory.

|

||||

1. After printing the script name, run the script!

|

||||

1. Save the standard output and standard error of the script into two separate files in the `logs` directory. If the name of the script is `job.sh` for example, then the output should be in the files `logs/job.sh.out` and `logs/job.sh.err`.
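The log redirection from the last step can be sketched in Python as follows. This is not the bash solution of the task, only an illustration of the idea under some assumptions: the function name `run_job` and its `interpreter` parameter are made up, and the demo job is a tiny Python script so that the example runs without `bash`.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_job(script, logs_dir, interpreter="bash"):
    """Run one job script and store its output in logs_dir.

    Standard output goes to NAME.out and standard error to NAME.err,
    where NAME is the file name of the script.
    """
    out_path = logs_dir / f"{script.name}.out"
    err_path = logs_dir / f"{script.name}.err"
    with out_path.open("w") as out, err_path.open("w") as err:
        proc = subprocess.run([interpreter, str(script)], stdout=out, stderr=err)
    return proc.returncode


with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp)
    logs = base / "logs"
    logs.mkdir()

    # Hypothetical job that writes to both output streams.
    job = base / "job.py"
    job.write_text("import sys\nprint('hello')\nprint('oops', file=sys.stderr)\n")

    code = run_job(job, logs, interpreter=sys.executable)
    out_text = (logs / "job.py.out").read_text()
    err_text = (logs / "job.py.err").read_text()
    print(code, repr(out_text), repr(err_text))
```

Because `subprocess.run` waits for the process to finish, calling `run_job` in a loop runs the jobs one after another, which is the behavior that the scheduler needs.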

|

||||

|

||||

##### Tips:

|

||||

|

||||

- On Debian-based distributions like Ubuntu, the package manager is `apt`. Before you can install any packages with `apt`, you have to run `apt update`. Unlike `dnf upgrade`, this does not run system updates; `apt update` only synchronizes the repositories, which is needed before installations.

|

||||

- Test if a file exists in bash:

|

||||

- Take a look at the examples from the sections of this day.

|

||||

- Take care of permissions.

|

||||

|

||||

If you have extra time, read about the command `screen` on the internet. `screen` allows you to run commands in the background. This way, you don't need two terminals.

|

||||

|

||||

## Task: Job submitter

|

||||

|

||||

In this task we will write a small script that lets us submit a job script to the scheduler from the last task.

|

||||

|

||||

The script should take the path to the job script as a single required argument.

|

||||

|

||||

The script should then copy the job script to the directory `jobs` while adding the time and date to the beginning of the name of the job script in the `jobs` directory.

|

||||

|

||||

Read the manual of the command `date` to know how to get the time and date in the following format: `2022-08-22T20:00:00+00:00`.

|

||||

|

||||

If the name of the job script is `job.sh` for example, the job script should be named `2022-08-22T20:00:00+00:00_job.sh` in the `jobs` directory.
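The naming scheme can be illustrated with a short Python sketch. It only shows how the final file name is composed; the task itself should still be solved with `date` in a shell script. The function name `job_name` is made up, and the sketch assumes that the time is taken in UTC, which yields the `+00:00` suffix from the example.

```python
from datetime import datetime, timezone


def job_name(script_name, now=None):
    """Prefix script_name with a timestamp like 2022-08-22T20:00:00+00:00."""
    if now is None:
        now = datetime.now(timezone.utc)
    # timespec="seconds" drops the microseconds from the timestamp.
    stamp = now.isoformat(timespec="seconds")
    return f"{stamp}_{script_name}"


fixed_time = datetime(2022, 8, 22, 20, 0, 0, tzinfo=timezone.utc)
example = job_name("job.sh", fixed_time)
print(example)
```

Since this timestamp format sorts alphabetically in chronological order, listing the `jobs` directory shows the jobs in the order of their submission.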

|

||||

|

||||

Use variables to write the script to make it more understandable.

|

||||

|

||||

#### Help

|

||||

|

||||

To save the output of a command into a variable, you have to use the following syntax:

|

||||

|

||||

```bash

|

||||

if [ -f FILE_PATH ]

|

||||

then

|

||||

(...)

|

||||

fi

|

||||

DATE=$(date ...)

|

||||

```

|

||||

|

||||

Replace `(...)` with your code. For more information on the option `-f` and other useful options for bash conditions, read the man page of the program `test`: `man test`.

|

||||

Replace `...` with your code.

|

||||

|

||||

To read the `n`-th argument that is provided to a script you write, you have to use `$n`.

|

||||

|

||||

Example script called `arg.sh`:

|

||||

|

||||

To test if a file does NOT exist, replace `-f` with `! -f`.

|

||||

- Exit a bash script with error code 1:

|

||||

```bash

|

||||

exit 1

|

||||

#!/usr/bin/bash

|

||||

|

||||

echo "The first argument is: $1"

|

||||

```

|

||||

|

||||

## Task: Static website

|

||||

When you run this script with an argument:

|

||||

|

||||

> 📍 : In this task, you should connect as the user `admin` to the Contabo server. **Don't do this task as the user that you created on the server!** ⚠️

|

||||

|

||||

> 📍 : Starting with this task: Asking you to replace `N` means to enter the number that you are using in the URL `ttydN.mo8it.com`.

|

||||

|

||||

In this task, you will host a static website which is a website that does not have a backend. A static website is just a set of HTML, CSS (and optionally JavaScript) files.

|

||||

|

||||

To host the website, we need a web server. In this task, we will use the Nginx web server.

|

||||

|

||||

Create a directory `~/nginxN` (replace `N`) with two directories in it: `website` and `conf`.

|

||||

|

||||

Place these two files:

|

||||

|

||||

1. `~/nginxN/conf/nginxN.jinext.xyz.conf` (replace `N`):

|

||||

|

||||

```

|

||||

server {

|

||||

listen 80;

|

||||

server_name nginxN.jinext.xyz;

|

||||

|

||||

location / {

|

||||

root /volumes/website;

|

||||

index index.html;

|

||||

}

|

||||

}

|

||||

```console

|

||||

$ ./arg.sh "Hello"

|

||||

The first argument is: Hello

|

||||

```

|

||||

|

||||

Replace `N` also in `server_name`!

|

||||

## Task: Submit a job

|

||||

|

||||

1. `~/nginxN/website/index.html` (replace `N`):

|

||||

Write small scripts of your choice that take a long time to run and submit them using the script from the last task. Make sure that the scheduler is running in the background.

|

||||

|

||||

```

|

||||

<!doctype html>

|

||||

<html lang="en">

|

||||

<head>

|

||||

<meta charset="utf-8">

|

||||

<meta name="viewport" content="width=device-width, initial-scale=1">

|

||||

<title>Demo</title>

|

||||

</head>

|

||||

<body>

|

||||

<h1>Hello world!</h1>

|

||||

</body>

|

||||

</html>

|

||||

```

|

||||

You can use the command `sleep` to simulate a job that needs a long time to run.

|

||||

|

||||

Create a Nginx container with the following options:

|

||||

Submit your job script multiple times and take a look at the terminal that is running the scheduler to make sure that the job scripts are running one after the other.

|

||||

|

||||

- Name: `nginxN`. Replace `N`!

|

||||

- Timezone `tz`: `local`.

|

||||

- Network: `traefik`.

|

||||

- Volumes:

|

||||

- `~/nginxN/website:/volumes/website` with labels `Z,ro`.

|

||||

- `~/nginxN/conf:/etc/nginx/conf.d` with labels `Z,ro`.

|

||||

- Label: `io.containers.autoupdate=registry`

|

||||

- Image: `docker.io/library/nginx:alpine`

|

||||

|

||||

Create the systemd file for the container above.

|

||||

|

||||

Move the systemd file to `~/.config/systemd/user`.

|

||||

|

||||

Enable and start the container as user services with `systemctl --user enable --now container-nginxN`. Replace `N`!

|

||||

|

||||

Visit [https://nginxN.jinext.xyz](https://nginxN.jinext.xyz) to see if everything worked! Replace `N`!

|

||||

|

||||

Now, you can edit `index.html` and add your own HTML content.

|

||||

|

||||

You can also add more files to the directory `website`. If you add a file `test.html` for example, then you should see it under [https://nginxN.jinext.xyz/test](https://nginxN.jinext.xyz/test).

|

||||

|

||||

## Task: Nextcloud

|

||||

|

||||

> 📍 : In this task, you should connect as the user `admin` to the Contabo server. **Don't do this task as the user that you created on the server!** ⚠️

|

||||

|

||||

In this task you will deploy your own cloud on the server: Nextcloud!

|

||||

|

||||

To do so, we will install Nextcloud as a container using `podman`.

|

||||

|

||||

To connect as `admin` again, change the user for the host `linux-lab` in `~/.ssh/config` back to `admin` or use `ssh admin@linux-lab` instead of only `ssh linux-lab`.

|

||||

|

||||

You can find more information about the Nextcloud container here: https://hub.docker.com/\_/nextcloud

|

||||

|

||||

Create a directory called `nextcloudN` (replace `N`) in the home directory of the user `admin`.

|

||||

|

||||

Create a directory called `nextcloudN-db` (replace `N`) for the database container.

|

||||

|

||||

Create a container for the database with the following options:

|

||||

|

||||

- Container name: `nextcloudN-db`. Replace `N`!

|

||||

- Timezone `tz`: `local`

|

||||

- Network: `traefik`

|

||||

- Volume: Mount the directory `nextcloudN-db` (replace `N`) that you created into `/var/lib/postgresql/data` in the container. Use the label `Z`!

|

||||

- The following environment variables:

|

||||

- `POSTGRES_DB=nextcloud`

|

||||

- `POSTGRES_USER=nextcloud`

|

||||

- `POSTGRES_PASSWORD=DB_PASSWORD`. Replace `DB_PASSWORD` with a good password!

|

||||

- Label: `io.containers.autoupdate=registry`

|

||||

- Image: `docker.io/library/postgres:alpine`

|

||||

|

||||

Create the actual Nextcloud container with the following options:

|

||||

|

||||

- Container name: `nextcloudN`. `N` at the end stands for the number that you are using in the URL to connect to the browser terminal `ttydN.mo8it.com`.

|

||||

- Timezone `tz`: `local`

|

||||

- Network: `traefik`

|

||||

- Volume: Mount the directory `nextcloudN` that you created into `/var/www/html` in the container. Use the label `Z`!

|

||||

- The same environment variables as for the other container! Use the same `DB_PASSWORD`. Add one more environment variable:

|

||||

- `POSTGRES_HOST=nextcloudN-db`. Replace `N`!

|

||||

- Label: `io.containers.autoupdate=registry`

|

||||

- Image: `docker.io/library/nextcloud:24-apache`

|

||||

|

||||

Create the systemd files for the two containers above.

|

||||

|

||||

Move the systemd files to `~/.config/systemd/user`.

|

||||

|

||||

Enable and start the two containers as user services with `systemctl --user enable --now container-nextcloudN-db` and `systemctl --user enable --now container-nextcloudN`. Replace `N`!

|

||||

|

||||

Visit [https://nextcloudN.jinext.xyz](https://nextcloudN.jinext.xyz) to see if everything worked! Replace `N`!

|

||||

Verify the redirection of the standard output and standard error in the directory `logs`.

|

||||

|

||||

## Task: Vim game

|

||||

|

||||

In this task, we are going to play a game! 🎮️

|

||||

|

||||

The game is an educational game that lets you learn the very basics of the navigation in Vim/Neovim.

|

||||

The game is an educational game that lets you learn the very basics of the navigation in Vim.

|

||||

|

||||

Play the game on this website: [https://vim-adventures.com](https://vim-adventures.com/)

|

||||

|

||||

## Task: Python rewrite

|

||||

|

||||

Choose one of the scripts that you have written during the course and rewrite it in Python!

|

||||

|

|

|

|||

1

src/day_5/clis_of_the_day.md

Normal file

|

|

@ -0,0 +1 @@

|

|||

# CLIs of the day

|

||||

|

|

@ -1,254 +1,216 @@

|

|||

# Notes

|

||||

|

||||

## Neovim

|

||||

## SSH

|

||||

|

||||

- `:q`: Quit (**very important!**)

|

||||

- `:q!`: Quit without saving (**important!**)

|

||||

- `j`: Down

|

||||

- `k`: Up

|

||||

- `h`: Left

|

||||

- `l`: Right

|

||||

- `i`: Insert at left of cursor

|

||||

- `a`: Insert at right of cursor (append)

|

||||

- `I`: Insert at beginning of line

|

||||

- `A`: Append to end of line

|

||||

- `Esc`: Normal mode

|

||||

- `w`: Go to beginning of next word

|

||||

- `b`: Go to beginning of previous word

|

||||

- `e`: Go to end of word

|

||||

- `gg`: Go to beginning of file

|

||||

- `G`: Go to end of file

|

||||

- `0`: Go to beginning of line

|

||||

- `$`: Go to end of line

|

||||

- `%`: Go to the other bracket

|

||||

- `u`: Undo

|

||||

- `Ctrl+r`: Redo

|

||||

- `:h`: Help

|

||||

- `:w`: Write buffer

|

||||

- `:wq`: Write buffer and exit

|

||||

- `/PATTERN`: Search

|

||||

- `n`: Next match

|

||||

- `N`: Previous match

|

||||

- `*`: Next match of the word under cursor

|

||||

- `o`: Add line below and enter insert mode

|

||||

- `O`: Add line above and enter insert mode

|

||||

- `v`: Start selection

|

||||

- `V`: Line selection (`Ctrl+v` for block selection)

|

||||

- `y`: Yank (copy)

|

||||

- `p`: Paste

|

||||

- `x`: Delete one character

|

||||

- `dw`: Delete word

|

||||

- `dd`: Delete line

|

||||

- `D`: Delete until end of line

|

||||

- `cw`: Change word

|

||||

- `cc`: Change line

|

||||

- `C`: Change until end of line

|

||||

- `di(`: Delete inside bracket `(`. Can be used with other brackets and quotation marks.

|

||||

- `da(`: Same as above but delete around, not inside.

|

||||

- `ci(`: Change inside bracket `(`. Can be used with other brackets and quotation marks.

|

||||

- `ca(`: Same as above but change around, not inside.

|

||||

- `:%s/OLD/NEW/g`: Substitute `OLD` with `NEW` in the whole file (with regex)

|

||||

- `:%s/OLD/NEW/gc`: Same as above but ask for confirmation for every substitution

|

||||

- `:N`: Go to line number `N`

|

||||

- `.`: Repeat last action

|

||||

- `<` and `>`: Indentation

|

||||

- `q`: Start recording a macro (followed by macro character)

|

||||

### Setup host

|

||||

|

||||

## Regular expressions (Regex)

|

||||

In `~/.ssh/config`

|

||||

|

||||

Can be used for example with `grep`, `rg`, `find`, `fd`, `nvim`, etc.

|

||||

```

|

||||

Host HOST

|

||||

HostName SERVERIP

|

||||

User SERVERUSER

|

||||

```

|

||||

|

||||

- `^`: Start of line

|

||||

- `$`: End of line

|

||||

- `()`: Group

|

||||

- `[abcd]`: Character set, here `a` until `d`

|

||||

- `[a-z]`: Character range, here `a` until `z`

|

||||

- `[^b-h]`: Negated character range, here any character except `b` to `h`

|

||||

- `.`: Any character

|

||||

- `.*`: 0 or more characters

|

||||

- `.+`: 1 or more characters

|

||||

- `\w`: Letter, digit or underscore

|

||||

- `\W`: Neither letter, digit nor underscore

|

||||

- `\d`: Digit

|

||||

- `\D`: Not digit

|

||||

- `\s`: Whitespace

|

||||

- `\S`: Not whitespace
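A few of the building blocks above can be tried out with Python's `re` module. This is a minimal sketch; the sample strings are made up, and other tools such as `grep` or `nvim` may need different escaping or options for some of these classes.

```python
import re

# ^ and $ anchor the pattern, () capture groups, \w+ is a word, \d+ a number.
line_pattern = re.compile(r"^(\w+)\s+(\d+)$")
match = line_pattern.match("day 5")
groups = match.groups()

# Negated character range: every character that is NOT between b and h.
outside_range = re.findall(r"[^b-h]", "abchiz")

# \s+ matches one or more whitespace characters (spaces, tabs, ...).
normalized = re.sub(r"\s+", " ", "a   b\tc")

print(groups, outside_range, normalized)
```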

|

||||

|

||||

## More tools

|

||||

|

||||

### Curl

|

||||

|

||||

We have used `curl` before, but not yet for downloading.

|

||||

### Generate key pair

|

||||

|

||||

```bash

|

||||

# Download file into current directory while using the default name

|

||||

curl -L LINK_TO_FILE -O

|

||||

|

||||

# Download file while giving the path to save the file into

|

||||

# (notice that we are using small o now, not O)

|

||||

curl -L LINK_TO_FILE -o PATH

|

||||

ssh-keygen -t ed25519 -C "COMMENT"

|

||||

```

|

||||

|

||||

`-L` tells `curl` to follow redirections (for example from `http` to `https`).

|

||||

Leave blank to take default for the prompt `Enter file in which to save the key (/home/USERNAME/.ssh/id_ed25519)`.

|

||||

|

||||

### cut

|

||||

Then enter a passphrase for your key. **You should not leave it blank!**

|

||||

|

||||

Demo file `demo.txt`:

|

||||

|

||||

```

|

||||

here,are,some

|

||||

comma,separated,values

|

||||

de mo,file,t x t

|

||||

```

|

||||

### Add public key to server

|

||||

|

||||

```bash

|

||||

# Get the N-th column by using SEP as separator

|

||||

cut -d SEP -f N FILE

|

||||

ssh-copy-id -i ~/.ssh/id_ed25519.pub HOST

|

||||

```

|

||||

|

||||

Example:

|

||||

|

||||

```console

|

||||

$ cut -d "," -f 1 demo.txt

|

||||

here

|

||||

comma

|

||||

de mo

|

||||

```

|

||||

|

||||

You can also pipe into `cut` instead of specifying `FILE`.
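To make clear what `cut -d SEP -f N` selects, here is a rough Python equivalent applied to the lines of `demo.txt`. It is a simplified sketch: the function name `cut` is only chosen to mirror the command, and unlike the real `cut` it raises an error if a line has fewer than `N` fields.

```python
def cut(lines, separator, field):
    """Return the field-th column (counted from 1, like cut -f) of every line."""
    return [line.split(separator)[field - 1] for line in lines]


demo = ["here,are,some", "comma,separated,values", "de mo,file,t x t"]
first_column = cut(demo, ",", 1)
third_column = cut(demo, ",", 3)
print(first_column)
print(third_column)
```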

|

||||

|

||||

### sed

|

||||

### Connect

|

||||

|

||||

```bash

|

||||

# Substitute

|

||||

sed 's/OLD/NEW/g' FILE

|

||||

|

||||

# Delete line that contains PATTERN

|

||||

sed '/PATTERN/d' FILE

|

||||

ssh HOST

|

||||

```

|

||||

|

||||

Example:

|

||||

### Config on server